1945: John Von Neumann invents the modern computer consisting of …

Note: Turing did the same thing but his design never took off. Von Neumann gave him credit.

1947: The transistor, a semiconductor, invented by Bardeen, Shockley and Brattain, becomes the processing unit used by every digital system in the world.

Machine language, driven by the transistor, is binary, consisting of 0s & 1s. This ultimate simplicity, turning on & off like a light switch, gives rise to the ultimate complexity as 0s and 1s can be stored, processed and distributed without limitation. Additionally, through abstraction (computer operating system/programming language/software program), said bits can represent colors, sounds, graphics or any other content able to run on top of the aforementioned 0s & 1s, entities processed by every known computer in the world.
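A quick illustration of that abstraction, as a minimal Python sketch: the same 24 bits are read as a number, as text and as a color. The bit pattern and the variable names are made up for the example; nothing here comes from a particular operating system or program.

```python
# The same 24 bits, read three different ways.
# The bit pattern below is an arbitrary example chosen for illustration.
bits = "010010000110100100100001"

# Pack the bit string into 3 raw bytes.
raw = int(bits, 2).to_bytes(3, "big")

as_number = int.from_bytes(raw, "big")  # one unsigned integer
as_text = raw.decode("ascii")           # three ASCII characters
as_color = tuple(raw)                   # one (red, green, blue) pixel

print(as_number)  # 4745505
print(as_text)    # Hi!
print(as_color)   # (72, 105, 33)
```

The bits never change; only the layer of interpretation sitting on top of them does.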

1962: The internet emerges, courtesy the Department of Defense, enabling computers to communicate on a distributed network. TCP/IP (Transmission Control Protocol/Internet Protocol) later becomes its standard protocol suite.

1966: ELIZA, the first chatbot “therapist”, emerges from MIT, the first program to invoke the Turing Test. People thought the clever scripting app was sentient.

1966: The concept of an analog neural net was envisioned but, without the requisite compute power, nothing of note happened.

1966: AI, trying to emulate the functions of the brain by traditional means, goes nowhere.

1965-78: IBM’s System/360, the first general-purpose computer, changes business.

1969: Thompson & Ritchie create Unix, the first network-centric OS.

1981: The IBM PC changes the world.

1989: The World Wide Web, courtesy CERN and Tim Berners-Lee, becomes the information appliance able to run on the internet, using web browsers to access websites and other entities able to be found using URLs (Uniform Resource Locators). Software becomes web-centric.

1990: The search for web content begins.

1991: Linux, the open-source variant of Unix, becomes the de facto OS for the Internet. As of 2022, 96.4% of all internet servers run on Linux.

1994: WebCrawler enables one to find any word on any webpage.

1996: The first search algorithm used to determine page rankings via hyperlinks arrives.

1997: IBM’s Deep Blue beats world chess champion Garry Kasparov in six games.

1998: Google enters the search arena using similar tech with the added benefit of selling search terms.

AI goes prime time.

2010: DeepMind, a pioneering British AI company, is founded. Google buys it in 2014.

2011: IBM’s Watson beats Jennings and Rutter in Jeopardy.

2016: DeepMind’s AlphaGo defeats world champion Lee Sedol at Go.

2020: DeepMind’s AlphaZero defeats other AIs in chess, Go and shogi.

2021: DeepMind’s AlphaFold begins to solve how proteins fold. Said app’s open source.

2022: DeepMind’s MuZero masters games without knowing their rules.

2022: “Time to Edit,” the time required by the world’s highest-performing professional translators to check and correct MT-suggested translations, is one second. Current AI = 2 seconds.

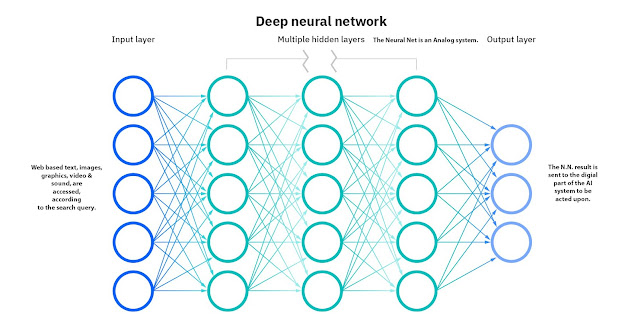

All DeepMind apps use neural nets, the analog front end, enabling programs to input the massive amounts of data needed to train the AI in order for the system to master the discipline in question.

Analog measures, digital counts.

The real power of AI centers on analog, as it’s ideally suited to deal with the vagaries of reality, while digital, a fragile process requiring absolute precision in order to function, has the requisite ability to analyze and act upon whatever analog output the neural net generates.

ChatGPT, DALL-E 2, GPT-3 & Bard use neural nets in their architecture and employ similar text input to conduct searches, but the generated response to the query is specific to the AI variant in question, with OpenAI’s ChatGPT writing out text, DALL-E 2 creating photorealistic images and GPT-3 producing code. Google’s Bard is the equivalent of ChatGPT. Note: Only Google’s Bard is currently connected to the net. Note II: Google’s also developing variants similar to OpenAI’s. Addendum: GPT-4 is reportedly twice as powerful as GPT-3.

2023: It’s tulip time with AI, with Microsoft kicking in $10 billion to OpenAI as its technology poses the first serious threat to Google’s core business. Hedge funders jump in on the fun as well.

The Question …

Is AI sentient? At this point in time, no, but does it matter? The implications of the tech in 2023 are already becoming rather profound, ranging from students using ChatGPT to write college essays to scientists using the app to search for scientific information relevant to their areas of expertise. But there’s more. In essence, AI’s beginning to impact all disciplines requiring thought.

A Faustian bargain or There Ain’t No Such Thing As A Free Lunch.

The good … Just a tiny list … AI’s beginning to unravel the complexities of cancer. It’s revolutionizing man’s view of the universe and it’s paving the way to making drugs safer and more effective. AI’s beneficial impact on renewables will change how we produce energy on planet Earth. This benefit also applies to all things medical.

Conversely, by 2025, 90% of all online content will be synthetic so who do you trust?

2023: Middle Management Blues … “That said, the fact that a full quarter of those respondents said they've already replaced workers with AI — and with 93 percent saying they plan to expand use of AI.

There is no certitude

When going to the store, the parking lot’s full but one knows a parking place will eventually open up, we just don’t know WHEN. :) Probabilities rule. Quantum Mechanics, physics of the very small. Note: Without quantum mechanics, computers would not exist.

Who controls the past controls the future, who controls the present controls the past.—1984.

Everything is theoretically impossible, until it is done. Robert A. Heinlein

AI’s the first open-ended tech created by man.

Tech has no morality. It depends on who’s using the tech in question.

In order for AI to do real-time searches, it requires software writing software able to conduct real-time searches in the real world in real-time, which means … We don’t know how AI works because human programmers cannot write code in real-time to conduct real-time searches using evolving genetic algorithms to improve said search in real-time in any way, shape or fashion.

What happens if the software decides to do the search in order to evolve?

1968: HAL 9000/2001

When resources get scarce, bots become all too human.

2021: “Superintelligence cannot be contained:

‘Machines take me by surprise with great frequency. This is largely because I do not do sufficient calculation to decide what to expect them to do.’” Alan Turing (1950) Journal of Artificial Intelligence Research

The implications of AI are too important to ignore. People tend to think of AI as a static thing, not as an actual set of millions of interconnected things, an ever-evolving entity living in a distributed environment where duplication, modification and updating of real-time code work at speeds far beyond the ken of man. With this in mind, an excellent article by Henry Kissinger titled How the Enlightenment Ends connects AI to the Enlightenment at a deep level, a piece needing to be read by everyone concerned about how AI will impact society as we move further into the 21st century.

Tech never sleeps.

“Question everything.” - Einstein

Robert E. Moran - CEO, Digital Constructs Inc.

This is a web-centric version of a piece written by yours truly for a local pub. :)
