AI, the open-ended tech, keeps its mystery to itself: we don't know how it works. Many parties have said so, yours truly included, and the linking of analog to digital that lets these systems interact with the world in real time is part of the reason.

No one yet knows how ChatGPT and its artificial-intelligence cousins will transform the world, and one reason is that no one really knows what goes on inside them. Some of these systems' abilities go far beyond what they were trained to do—and even their inventors are baffled as to why. A growing number of tests suggest these AI systems develop internal models of the real world, much as our own brain does, although the machines' technique is different.

“Everything we want to do with them in order to make them better or safer or anything like that seems to me like a ridiculous thing to ask ourselves to do if we don't understand how they work,” says Ellie Pavlick of Brown University, one of the researchers working to fill that explanatory void.

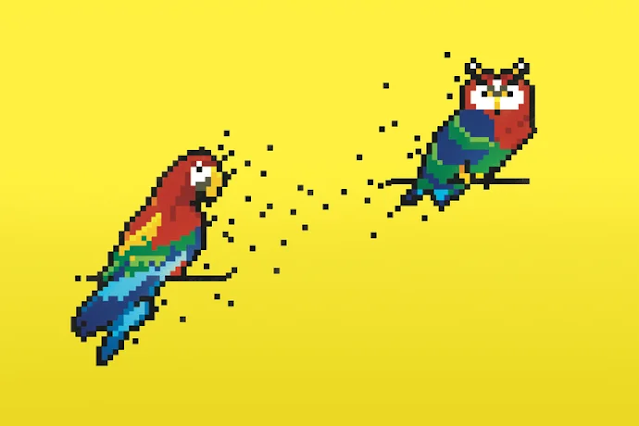

At one level, she and her colleagues understand GPT (short for “generative pre-trained transformer”) and other large language models, or LLMs, perfectly well. The models rely on a machine-learning system called a neural network. Such networks have a structure modeled loosely after the connected neurons of the human brain. The code for these programs is relatively simple and fills just a few screens. It sets up an autocorrection algorithm, which chooses the most likely word to complete a passage based on laborious statistical analysis of hundreds of gigabytes of Internet text. Additional training ensures the system will present its results in the form of dialogue. In this sense, all it does is regurgitate what it learned—it is a “stochastic parrot,” in the words of Emily Bender, a linguist at the University of Washington. (Not to dishonor the late Alex, an African Grey Parrot who understood concepts such as color, shape and “bread” and used corresponding words intentionally.) But LLMs have also managed to ace the bar exam, write a sonnet about the Higgs boson and make an attempt to break up their users' marriage. Few had expected a fairly straightforward autocorrection algorithm to acquire such broad abilities.
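To make the "chooses the most likely word" idea concrete, here is a toy sketch. It is not how GPT works: real LLMs use a neural network trained on vast amounts of text, while this example only counts which word most often follows another in a tiny made-up sentence. The function names `train_bigrams` and `most_likely_next` and the sample corpus are illustrative assumptions, not anything from the article.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def most_likely_next(following, word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical miniature "training corpus" for illustration only.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))  # prints "cat"
```

Even this crude counter "completes a passage" in a statistical sense; the surprise described in the article is how much more emerges when the same basic objective is scaled up with a neural network.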

That GPT and other AI systems perform tasks they were not trained to do, giving them “emergent abilities,” has surprised even researchers who have been generally skeptical about the hype over LLMs. “I don't know how they're doing it or if they could do it more generally the way humans do—but they've challenged my views,” says Melanie Mitchell, an AI researcher at the Santa Fe Institute.

Glad to see yours truly's take on AI is valid after all. :)

Zen 101
