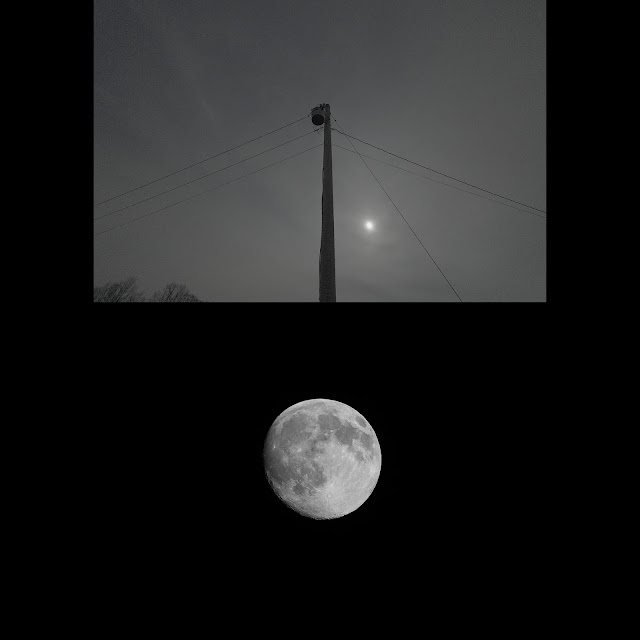

11:00PM ...

Why this image when this blurb is about AI, the open-ended tech that may never be fully understood? Reacting to real-time input takes real-time software, something not possible unless that software is rewriting itself in real time to cope with the input. With this image as an indirect reference, it stands to reason that the people who build this code are getting rather nervous, to say the least, as the software evolves at speeds beyond the ken of man. That said, anybody who states AI will never become sentient assumes everything will remain as it is. Well, we all know how that turns out, right?

Why is 11:00PM the cover image for this blurb? The less-than-sanguine vibes of AI as a possible dark harbinger of the future are reflected, indirectly, in the quasi-dark nature of this piece, although the summer night in question, when this pic was taken, was truly stellar: it was the prelude to a hurricane that fortunately passed us by back in 2021. :)

To give you some of the background: The most successful AI models today are known as GANs, or Generative Adversarial Networks. They have a two-part structure where one part of the program is trying to generate a picture (or sentence) from input data, and a second part is grading its performance. What the new paper proposes is that at some point in the future, an advanced AI overseeing some important function could be incentivized to come up with cheating strategies to get its reward in ways that harm humanity.

“Under the conditions we have identified, our conclusion is much stronger than that of any previous publication—an existential catastrophe is not just possible, but likely,” Cohen said on Twitter in a thread about the paper.

"In a world with infinite resources, I would be extremely uncertain about what would happen. In a world with finite resources, there's unavoidable competition for these resources," Cohen told Motherboard in an interview. "And if you're in a competition with something capable of outfoxing you at every turn, then you shouldn't expect to win. And the other key part is that it would have an insatiable appetite for more energy to keep driving the probability closer and closer."

